Opinion: The crisis of thinking in the AI age

We should not fear a machine that thinks. We should fear becoming people who do not.

By Nikhil Reddy

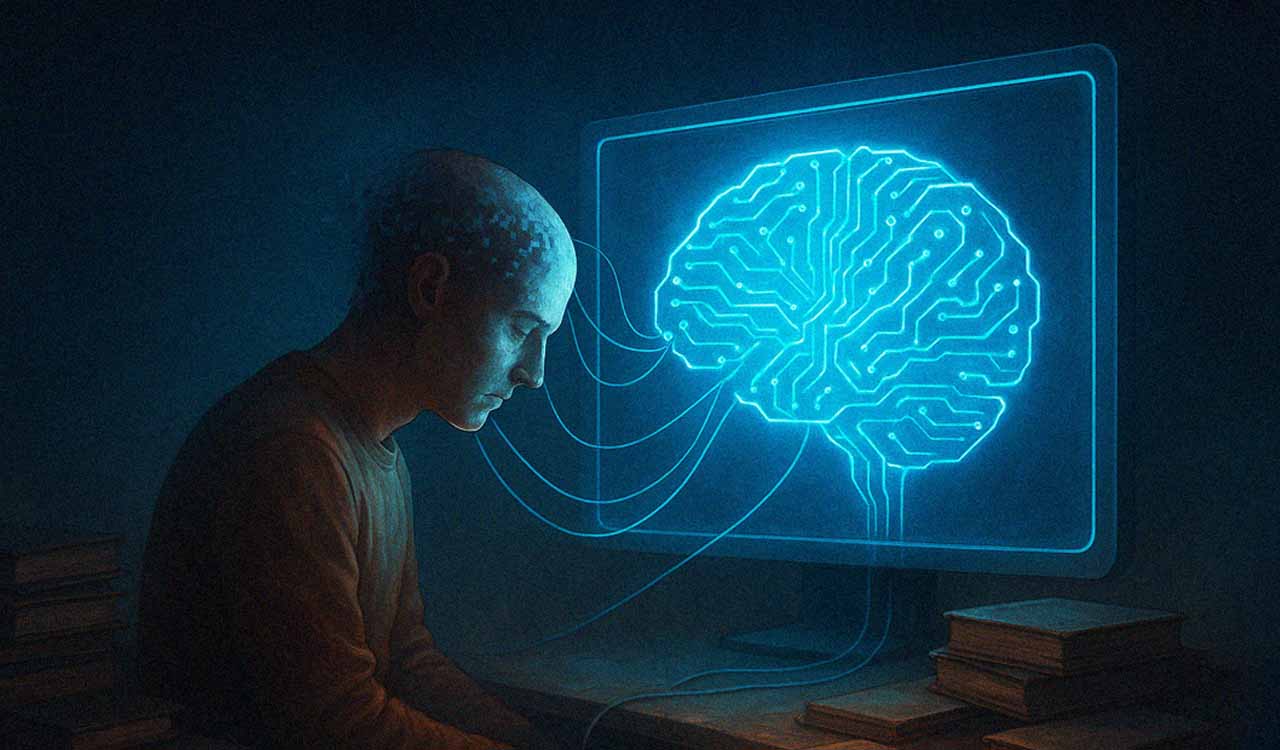

Artificial intelligence is transforming not only what we know but how we know. Its arrival in classrooms and offices was not dramatic but stealthy. One day, students were highlighting PDFs and wrestling with readings. Then suddenly they were feeding the same material into chatbots that could summarise, interpret, and even reflect. The line between understanding and outsourcing blurred quietly, almost painlessly. We congratulated ourselves for embracing efficiency, telling a comforting story about “assistance.” In truth, we may have welcomed a substitute for the very mental struggle that once defined learning.

Heidegger’s Warning

The philosopher Martin Heidegger argued that technology is never just a tool but a way of understanding the world. That now feels uncomfortably prophetic. AI does not simply execute commands; it configures how we interpret knowledge itself. Each prompt and polished reply creates a shortcut in cognition. The more we rely on those shortcuts, the less we notice the terrain we no longer cross. The gain in efficiency hides a loss of engagement. Machines do not merely answer our questions; they begin to shape how questions arise at all. When we allow them to mediate our thinking, we slowly acclimate to seeing the world through their logic rather than our own.

Heidegger warned that technology develops its own momentum, quietly reorganising society and thought. The danger, he wrote, is not destruction but forgetfulness, and the erosion of deeper forms of understanding that require time, struggle, and patience. That warning once applied to industrial machinery; today, it fits the digital classroom with unsettling precision. The problem with AI is not its capability but what it quietly teaches us to stop doing.

Irony-laced Reassurances

History offers its irony-laced reassurances. Plato feared writing would destroy memory, arguing that written words created only the appearance of wisdom. He was both right and wrong. Writing did weaken memory, yet it preserved ideas that would have otherwise vanished. Plato survives because he did the very thing he warned against. Every technological leap offers this dual legacy. The printing press spread literacy while contracting oral tradition. The internet democratised information while pulverising attention spans. Now AI promises comprehension without effort, and the price may be our capacity for reflection.

Tools have always extended human thought. Clay tablets, scrolls, and typewriters each expanded the mind’s reach without replacing the mind’s work. AI feels different because it edges into reasoning itself. It does not merely record or distribute knowledge; it simulates the process of arriving at it. Its outputs look complete, confident, and deceptively insightful. That ease tempts us into mistaking fluency for understanding. At that moment, thinking becomes theatre.

You can see the consequences in the modern classroom. I read essays that are immaculate yet oddly frictionless. Arguments unfold with a mechanical balance, untouched by doubt or discovery. They feel like knowledge pre-assembled for consumption, missing the texture of uncertainty that marks genuine thought. Students are not interrogating theories so much as prompting them. The result is a polite, polished, and hollow intellectual culture that values answers over inquiry.

Philosopher and psychologist John Dewey described critical thinking as a pause between stimulus and response, a form of reflective thought that resists haste. True learning, he believed, begins with doubt and delay. Today, the pause has been replaced by a prompt box. Time once spent wrestling with an idea dissolves into rephrasing the same request until the machine returns something that “sounds right.” Haste, once a barrier to thought, is now a feature of it.

French philosopher Jacques Derrida wrote that every medium reveals and conceals, and worried that writing froze living thought into fixed marks. Yet even he could not have anticipated AI’s leap, a system that does not externalise thinking but instead manufactures the illusion of it.

Erosion of Effort

This is the core danger: not misinformation or malfunction, but the erosion of effort. AI seduces us into accepting coherence as comprehension. It builds arguments, connects citations, and fashions interpretations in seconds. What disappears is the struggle that makes ideas durable. Human minds learn through friction, not fluency. When machines remove friction, curiosity and scepticism quietly atrophy. Some will insist that calculators did not destroy arithmetic, and the internet did not destroy reading. But calculators do not pretend to think for us. AI does. A calculator accelerates calculation; it does not write proofs. AI writes proofs, essays, explanations. It outputs conclusions, and conclusions are where thought stops.

Philosophers have long linked intelligence to imagination. Another French philosopher, Michel Serres, noted that we understand abstraction through examples but warned that when examples dominate, thought collapses into simplicity. AI thrives on examples. It reduces ambiguity into digestible templates. Ask it about justice, and it returns a brisk, tidy definition. What it cannot do is dwell in ambiguity, sit in uncertainty, or wander toward insight. Its design rewards clarity, not complexity.

False Confidence

Simplification has its virtues, since it lowers barriers to knowledge. But oversimplification breeds false confidence. The real threat is not ignorance but its mimicry. Socrates warned of those who believe they know when they do not. AI accelerates this illusion by making information feel like insight. We read a neat paragraph and feel enlightened, when we have merely skimmed the surface.

This flattening of thought extends beyond education. Public discourse was already battered by social media’s compression of nuance. Now AI can generate convincing certainty on demand. Complexity collapses into slogans. Debate becomes the repetition of plausible language rather than the testing of ideas. We move from conversation to simulation of conversation. Yet this trajectory is not inevitable. AI can expand rather than dull thought. It can surface patterns, challenge assumptions, and scaffold inquiry. Used wisely, it frees time for interpretation and reflection. That requires treating AI not as an oracle but as a tool whose value depends on our scrutiny.

Information is data arranged in language; knowledge is data tempered by doubt. We can choose to use AI without surrendering thought. That means questioning its claims, interrogating its elegance, and remembering that learning is not a product to retrieve but a process to endure. Technology has always tempted us toward shortcuts. The moral task is not to reject the shortcut but to remember what the long road cultivates.

The danger is not artificial intelligence. The danger is artificial understanding. AI can generate language that sounds thoughtful. Only we can do the harder work of thinking. If we abandon that work, the world will not collapse dramatically; it will thin quietly. Thought will fade not in crisis but in convenience. We should not fear a machine that thinks. We should fear becoming people who do not.

(The author is a PhD candidate in Mass Communication at Ohio University)
